1. Create a Web Services DataSource in the BI system. When you activate the Web Service DataSource, the system automatically generates a Web Service/RFC function module for it (here /BIC/CQFI_GL_00001000).

2. Create a transformation on the data target (DSO), with the Web Service DataSource as the source of the transformation.

3. Create a DTP on the data target, selecting ‘DTP for Real-Time Data Acquisition’ as the DTP type.

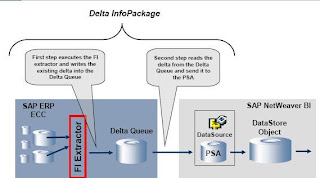

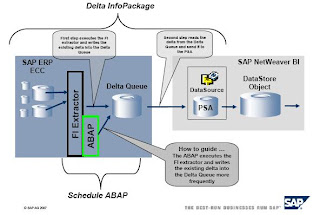

4. Create an InfoPackage. When you create an InfoPackage for a Web Services DataSource, the Real-Time field is enabled automatically. When you create one for an SAP Source System DataSource, you have to enable the Real-Time field yourself while creating the InfoPackage, provided the DataSource supports RDA.

5. In the Processing tab of the InfoPackage, enter the maximum time (threshold value) a request may stay open. Once that limit is crossed, RDA creates a new request. The data is still updated into the data target as soon as it arrives from the source system (about 1 min), even though the request stays open to take new records.

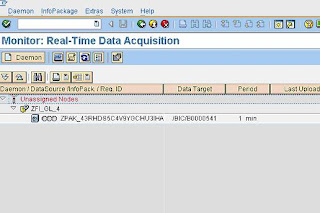
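The request threshold described above can be made concrete with a small simulation. This is a toy model under stated assumptions, not SAP's implementation: it assumes the daemon wakes up every 60 seconds (mirroring the "about 1 min" above), moves every record that has reached the PSA into the currently open request, and, once that request has been open for the threshold time, closes it and immediately opens the next one. `Request` and `simulate_rda` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """Hypothetical stand-in for an RDA request (not an SAP object)."""
    request_id: int
    opened_at: int                      # seconds since the daemon started
    records: list = field(default_factory=list)


def simulate_rda(arrivals, threshold_s, daemon_period_s=60):
    """Toy model of how records are distributed over RDA requests.

    arrivals: iterable of (arrival_time_s, record) pairs, i.e. the moment a
    record reaches the PSA. Returns (closed_requests, open_request).
    """
    if daemon_period_s <= 0:
        raise ValueError("daemon_period_s must be positive")
    pending = sorted(arrivals, key=lambda a: a[0])
    closed = []
    current = Request(request_id=1, opened_at=0)  # opened when the daemon starts
    t = 0
    i = 0
    while i < len(pending):
        t += daemon_period_s  # next daemon run (assumed fixed period)
        # Pick up everything that reached the PSA since the previous run.
        while i < len(pending) and pending[i][0] <= t:
            current.records.append(pending[i][1])
            i += 1
        # Threshold reached: close this request and open a new one at once.
        if t - current.opened_at >= threshold_s:
            closed.append(current)
            current = Request(request_id=current.request_id + 1, opened_at=t)
    return closed, current
```

With a 180-second threshold, records arriving at 10, 70 and 130 seconds end up in the first request, which the model closes at its 180-second daemon run; records arriving at 200 and 250 seconds go into the second request, which is still open when the simulation ends.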

6. On the Schedule tab, click Assign to go to the RDA Monitor. (You can also open the RDA Monitor with transaction RSRDA.)

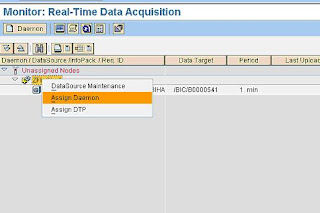

7. From the Unassigned node, assign the DataSource to a new daemon. (This is required before the daemon can be started.)

8. Assign the DTP to the newly created daemon.

9. Start the daemon.

10. Once the daemon is started, you can see the open request in the PSA of the DataSource, or in the RDA Monitor under the InfoPackage.

11. From the source system, call the RFC function module that was generated when the DataSource was created. See the Appendix for a test FM that calls the RFC from the source system (ZTEST_BW).

12. In the RDA Monitor or the PSA table, you can now see 1 record under the open request.

When you call the RFC from the source system, it takes about one minute for the record to reach the PSA of the DataSource. Once the record is in the PSA table, the RDA daemon creates a new open request for the DTP and updates the data into the data target at the same time.

13. Close the request. You can close the request manually; this creates a new request for the same InfoPackage. The step is not mandatory, but it is recommended for performance reasons.

14. Stop the daemon load. The daemon stays in sleep mode all the time and starts working automatically as soon as a request comes in from the source system, so in general practice you do not need to stop it, but you can if required.

Appendix: Create a test FM to call the RFC in the BI system. (I am using the RFC for testing purposes; you can also use the Web Service.) Below is the FM that gets created automatically on the BI side when we activate the Web Services DataSource.

This FM takes its import parameter as a table type, which is linked to a line type structure.
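To illustrate the table type / line type relationship, here is a minimal Python sketch of preparing the import table on the calling side. The fields of `GlLine` are hypothetical; the real line type structure is whatever the Web Service DataSource defines. Each `GlLine` instance stands for one row of the line type, and the table type is simply a list of such rows. `to_table_param` (also a hypothetical helper) converts that list into a list of dictionaries keyed by upper-case field names, which is the shape RFC client libraries such as PyRFC typically use for table parameters.

```python
from dataclasses import dataclass, asdict
from decimal import Decimal


@dataclass(frozen=True)
class GlLine:
    """Hypothetical line type; real fields come from the DataSource definition."""
    comp_code: str
    gl_acct: str
    amount: Decimal


def to_table_param(rows):
    """Build a table parameter: one dict per row, upper-case ABAP-style keys."""
    table = []
    for row in rows:
        # Every row of a table type must conform to its line type.
        if not isinstance(row, GlLine):
            raise TypeError(f"expected GlLine, got {type(row).__name__}")
        table.append({name.upper(): value for name, value in asdict(row).items()})
    return table
```

Typing the rows with a dataclass keeps the line type in one place, so a row with a missing or misspelled field fails on the caller's side instead of inside the RFC call.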