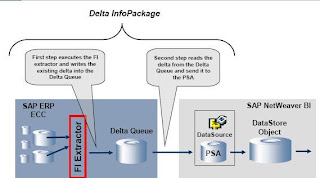

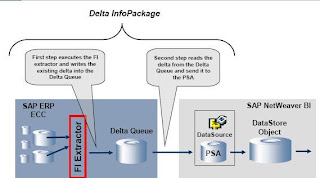

When a Delta InfoPackage for the DataSource 0FI_GL_4 is executed in SAP NetWeaver BI, the extraction process in the ECC source system consists mainly of two activities:

- First, the FI extractor calls an FI-specific function module that reads the FI documents created or changed since the last delta request from the application tables and writes them into the delta queue.
- Second, the Service API reads the delta from the delta queue and sends the FI documents to BI.

The first activity is the time-consuming one. Collecting the delta information can take a long time if the FI application tables in the ECC system contain many entries, or if processes running in parallel frequently insert changed FI documents.

A solution might be to execute the Delta InfoPackage to BI more frequently to process

smaller sets of delta records. However, this might not be feasible for several reasons:

First, it is not recommended to load data with a high frequency using the normal

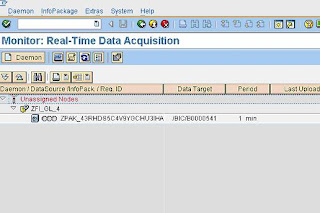

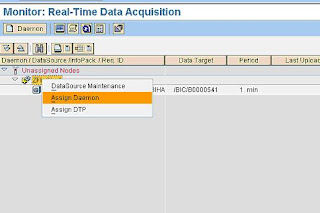

extraction process into BI. Second, the new Real-Time Data Acquisition (RDA)

functionality delivered with SAP NetWeaver 7.0 can only be used within the new

Dataflow. This would make a complete migration of the Dataflow necessary. Third, as of

now the DataSource 0FI_GL_4 is not officially released for RDA.

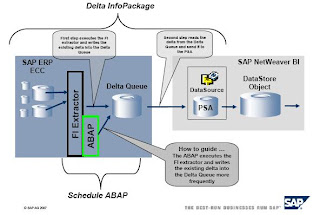

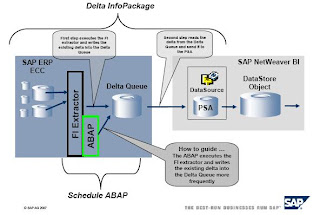

To process the time-consuming first step without executing the Delta InfoPackage, the ABAP report attached to this document executes that first step of the extraction process on its own. The report reads all new and changed documents from the FI tables and writes them into the BI delta queue. It can be scheduled to run frequently, e.g. every 30 minutes.

The Delta InfoPackage can be scheduled independently of this report. Most of the delta information will then already be in the delta queue. This greatly reduces the number of records that the time-consuming step (the first part of the extraction) has to process from the FI application tables, as shown in the picture below.

The Step By Step Solution

4.1 Implementation Details

To encapsulate the first part of the original process, the attached ABAP report creates a fake delta initialization for the logical system 'DUMMY_BW'. (This logical system can have any name, as long as no actual BI system exists under that name.) As a result, two delta queues exist for the 0FI_GL_4 extractor in the SAP ERP (ECC) system: one for 'DUMMY_BW' and one for the 'real' BI system.

The second part of the report executes a delta request for the logical system 'DUMMY_BW'. This request reads all records created or changed since the previous delta request and writes them into the delta queues of all connected BI systems.

The logical BI system 'DUMMY_BW' is needed because the function module used in the report writes the data into the delta queue and marks the delta as already sent to the requesting system, here 'DUMMY_BW'. The data in the delta queue of 'DUMMY_BW' is therefore not needed for further processing and is deleted in the last part of the report.

The delta levels of the different BI systems are handled by the delta queue and are independent of the logical system. Thus, the delta remains available in the queue of the 'real' BI system, ready to be sent during the next Delta InfoPackage execution.

This methodology can be applied to any BI extractor that uses the delta queue functionality.
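The interplay of the two delta queues can be illustrated with a small model. The following Python sketch is a conceptual simulation only, not SAP code: the class `SourceSystem`, its methods, and the system name 'REAL_BW' are hypothetical, and the FI application tables are reduced to an append-only list. Under these assumptions it shows that a delta request for 'DUMMY_BW' also fills the queue of the real BI system, so that the later real delta request only has to collect the documents posted in the meantime.

```python
class SourceSystem:
    """Toy model of a source system with one delta queue per target system."""

    def __init__(self):
        self.documents = []   # stands in for the FI application tables
        self.collected = 0    # number of documents already written to the queues
        self.queues = {}      # delta queue per logical system

    def init_delta(self, logsys):
        """Model a delta initialization: the target system gets its own queue."""
        self.queues[logsys] = []

    def post(self, document):
        """Model a new or changed FI document."""
        self.documents.append(document)

    def delta_request(self, logsys):
        """Run both extraction steps for one requesting system.

        Returns the data sent to that system and the number of documents
        that step 1 had to read from the application tables.
        """
        # Step 1: collect new documents into the queues of ALL target systems.
        new_documents = self.documents[self.collected:]
        self.collected = len(self.documents)
        for queue in self.queues.values():
            queue.extend(new_documents)
        # Step 2: send the queue of the requesting system and empty it.
        sent = self.queues[logsys]
        self.queues[logsys] = []
        return sent, len(new_documents)


ecc = SourceSystem()
ecc.init_delta("REAL_BW")
ecc.init_delta("DUMMY_BW")

ecc.post("doc 1")
ecc.post("doc 2")
# The collector report: a delta request for the dummy system whose data is discarded.
_, read_by_report = ecc.delta_request("DUMMY_BW")

ecc.post("doc 3")
# The Delta InfoPackage of the real BI system.
sent_to_bi, read_by_infopackage = ecc.delta_request("REAL_BW")

print(sent_to_bi)            # all three documents reach the real BI system
print(read_by_infopackage)   # number of documents the InfoPackage had to collect itself
```

In this model the real delta request still delivers every document, but its own collection step only reads the single document posted after the report ran.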

Because the report uses standard functionality of the Plug-In component, the handling of data requests for BI is unchanged. If the second part fails, it can be repeated. The creation and deletion of delta initializations is also unchanged.

Both the ABAP report and the normal FI extractor read the delta sequentially. The data is sent to BI in parallel.

If the report is scheduled to run every 30 minutes, it may coincide with the execution of the BI Delta InfoPackage. In that case, some records are written to the delta queues twice, once by each process.

This is not an issue: further processing in the BI system using a DataStore Object with delta handling capabilities automatically filters out the duplicate records during data activation. Therefore, running this report in parallel with the BI Delta InfoPackage does not cause any data inconsistencies in BI. (See also SAP Note 844222.)
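Why duplicate records are harmless can be sketched in a few lines. The following Python model is an illustration under assumptions, not SAP code: it assumes that the DataStore Object updates its data fields in overwrite mode and that a record is identified by company code, fiscal year, document number and line item. The function `activate`, the field names, and the sample values are hypothetical.

```python
KEY_FIELDS = ("company_code", "fiscal_year", "document_no", "line_item")


def activate(active_table, records):
    """Apply records to the active table; a record with an existing key overwrites it."""
    for record in records:
        key = tuple(record[field] for field in KEY_FIELDS)
        active_table[key] = record
    return active_table


line_item = {"company_code": "1000", "fiscal_year": 2008,
             "document_no": "0100000001", "line_item": 1, "amount": 250.0}

once = activate({}, [line_item])
twice = activate({}, [line_item, dict(line_item)])  # same record delivered twice

print(once == twice)  # the duplicate does not change the activated data
```

Because the second copy of a record only overwrites the first with identical values, the activated data is the same whether a record arrives once or twice. With additive updates this would not hold, which is why the overwrite assumption matters.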


4.2 Step by Step Guide

1. Create a new Logical System using transaction BD54.

   This Logical System name is used in the report as a constant:

   c_dlogsys TYPE logsys VALUE 'DUMMY_BW'

   In this example, the name of the Logical System is 'DUMMY_BW'. If a different name is defined in this step, change the constant in the report accordingly.

2. Implement an executable ABAP report YBW_FI_GL_4_DELTA_COLLECT in transaction SE38.

   The code for this ABAP report can be found in the appendix.


3. Maintain the selection texts of the report.

   In the ABAP editor menu, choose Goto → Text Elements → Selection Texts.

4. Maintain the text symbols of the report.

   In the ABAP editor menu, choose Goto → Text Elements → Text Symbols.


5. Create a variant for the report. The "Target BW System" has to be an existing BI system for which a delta initialization exists.

   In transaction SE38, click Variants.

6. Schedule the report via transaction SM36 to be executed every 30 minutes, using the variant created in step 5.

Code

*&---------------------------------------------------------------------*
*& Report YBW_FI_GL_4_DELTA_COLLECT
*&
*&---------------------------------------------------------------------*
*&
*& This report collects new and changed documents for the 0FI_GL_4 from
*& the FI application tables and writes them to the delta queues of all
*& connected BW systems.
*&
*& The BW extractor itself therefore needs only to process a small
*& amount of records from the application tables to the delta queue,
*& before the content of the delta queue is sent to the BW system.
*&
*&---------------------------------------------------------------------*
REPORT ybw_fi_gl_4_delta_collect.

TYPE-POOLS: sbiw.

* Constants
* The 'DUMMY_BW' constant is the same as defined in Step 1 of the How to guide
CONSTANTS: c_dlogsys    TYPE logsys     VALUE 'DUMMY_BW',
           c_oltpsource TYPE roosourcer VALUE '0FI_GL_4'.

* Field symbols (names reconstructed; any valid names can be used)
FIELD-SYMBOLS: <l_s_roosprmsc> TYPE roosprmsc,
               <l_s_roosprmsf> TYPE roosprmsf.

* Variables
DATA: l_slogsys      TYPE logsys,
      l_tfstruc      TYPE rotfstruc,
      l_lines_read   TYPE sy-tabix,
      l_subrc        TYPE sy-subrc,
      l_s_rsbasidoc  TYPE rsbasidoc,
      l_s_roosgen    TYPE roosgen,
      l_s_parameters TYPE roidocprms,
      l_t_fields     TYPE TABLE OF rsfieldsel,
      l_t_roosprmsc  TYPE TABLE OF roosprmsc,
      l_t_roosprmsf  TYPE TABLE OF roosprmsf.

* Selection parameters
SELECTION-SCREEN: BEGIN OF BLOCK b1 WITH FRAME TITLE text-001.
SELECTION-SCREEN SKIP 1.
PARAMETER prlogsys LIKE tbdls-logsys OBLIGATORY.
SELECTION-SCREEN: END OF BLOCK b1.

AT SELECTION-SCREEN.
* Check logical system
  SELECT COUNT( * ) FROM tbdls BYPASSING BUFFER
    WHERE logsys = prlogsys.
  IF sy-subrc <> 0.
    MESSAGE e454(b1) WITH prlogsys.
*   The logical system & has not yet been defined
  ENDIF.

START-OF-SELECTION.
* Check if logical system for dummy BW is defined (Transaction BD54)
  SELECT COUNT( * ) FROM tbdls BYPASSING BUFFER
    WHERE logsys = c_dlogsys.
  IF sy-subrc <> 0.
    MESSAGE e454(b1) WITH c_dlogsys.
*   The logical system & has not yet been defined
  ENDIF.

* Get own logical system
  CALL FUNCTION 'RSAN_LOGSYS_DETERMINE'
    EXPORTING
      i_client = sy-mandt
    IMPORTING
      e_logsys = l_slogsys.

* Check if transfer rules exist for this extractor in BW
  SELECT SINGLE * FROM roosgen INTO l_s_roosgen
    WHERE oltpsource = c_oltpsource
      AND rlogsys    = prlogsys
      AND slogsys    = l_slogsys.
  IF sy-subrc <> 0.
    MESSAGE e025(rj) WITH prlogsys.
*   No transfer rules for target system &
  ENDIF.

* Copy record for dummy BW system
  l_s_roosgen-rlogsys = c_dlogsys.
  MODIFY roosgen FROM l_s_roosgen.
  IF sy-subrc <> 0.
    MESSAGE e053(rj) WITH text-002.
*   Update of table ROOSGEN failed
  ENDIF.

* Assignment of source system to BW system
  SELECT SINGLE * FROM rsbasidoc INTO l_s_rsbasidoc
    WHERE slogsys = l_slogsys
      AND rlogsys = prlogsys.
  IF sy-subrc <> 0 OR
     ( l_s_rsbasidoc-objstat = sbiw_c_objstat-inactive ).
    MESSAGE e053(rj) WITH text-003.
*   Remote destination not valid
  ENDIF.

* Copy record for dummy BW system
  l_s_rsbasidoc-rlogsys = c_dlogsys.
  MODIFY rsbasidoc FROM l_s_rsbasidoc.
  IF sy-subrc <> 0.
    MESSAGE e053(rj) WITH text-004.
*   Update of table RSBASIDOC failed
  ENDIF.

* Delta initializations
  SELECT * FROM roosprmsc INTO TABLE l_t_roosprmsc
    WHERE oltpsource = c_oltpsource
      AND rlogsys    = prlogsys
      AND slogsys    = l_slogsys.
  IF sy-subrc <> 0.
    MESSAGE e020(rsqu).
*   Some of the initialization requirements have not been completed
  ENDIF.

  LOOP AT l_t_roosprmsc ASSIGNING <l_s_roosprmsc>.
    IF <l_s_roosprmsc>-initstate = ' '.
      MESSAGE e020(rsqu).
*     Some of the initialization requirements have not been completed
    ENDIF.
    <l_s_roosprmsc>-rlogsys = c_dlogsys.
    <l_s_roosprmsc>-gottid  = ''.
    <l_s_roosprmsc>-gotvers = '0'.
    <l_s_roosprmsc>-gettid  = ''.
    <l_s_roosprmsc>-getvers = '0'.
  ENDLOOP.

* Delete old records for dummy BW system
  DELETE FROM roosprmsc
    WHERE oltpsource = c_oltpsource
      AND rlogsys    = c_dlogsys
      AND slogsys    = l_slogsys.

* Copy records for dummy BW system
  MODIFY roosprmsc FROM TABLE l_t_roosprmsc.
  IF sy-subrc <> 0.
    MESSAGE e053(rj) WITH text-005.
*   Update of table ROOSPRMSC failed
  ENDIF.

* Filter values for delta initializations
  SELECT * FROM roosprmsf INTO TABLE l_t_roosprmsf
    WHERE oltpsource = c_oltpsource
      AND rlogsys    = prlogsys
      AND slogsys    = l_slogsys.
  IF sy-subrc <> 0.
    MESSAGE e020(rsqu).
*   Some of the initialization requirements have not been completed
  ENDIF.

  LOOP AT l_t_roosprmsf ASSIGNING <l_s_roosprmsf>.
    <l_s_roosprmsf>-rlogsys = c_dlogsys.
  ENDLOOP.

* Delete old records for dummy BW system
  DELETE FROM roosprmsf
    WHERE oltpsource = c_oltpsource
      AND rlogsys    = c_dlogsys
      AND slogsys    = l_slogsys.

* Copy records for dummy BW system
  MODIFY roosprmsf FROM TABLE l_t_roosprmsf.
  IF sy-subrc <> 0.
    MESSAGE e053(rj) WITH text-006.
*   Update of table ROOSPRMSF failed
  ENDIF.

*************************************
* COMMIT WORK for changed meta data *
*************************************
  COMMIT WORK.

* Delete RFC queue of dummy BW system
* (Just in case entries of other delta requests exist)
  CALL FUNCTION 'RSC1_TRFC_QUEUE_DELETE_DATA'
    EXPORTING
      i_osource           = c_oltpsource
      i_rlogsys           = c_dlogsys
      i_all               = 'X'
    EXCEPTIONS
      tid_not_executed    = 1
      invalid_parameter   = 2
      client_not_found    = 3
      error_reading_queue = 4
      OTHERS              = 5.
  IF sy-subrc <> 0.
    MESSAGE ID sy-msgid TYPE sy-msgty NUMBER sy-msgno
      WITH sy-msgv1 sy-msgv2 sy-msgv3 sy-msgv4.
  ENDIF.

*******************************************
* COMMIT WORK for deletion of delta queue *
*******************************************
  COMMIT WORK.

* Get MAXLINES for data package
  CALL FUNCTION 'RSAP_IDOC_DETERMINE_PARAMETERS'
    EXPORTING
      i_oltpsource   = c_oltpsource
      i_slogsys      = l_slogsys
      i_rlogsys      = prlogsys
      i_updmode      = 'D '
    IMPORTING
      e_s_parameters = l_s_parameters
      e_subrc        = l_subrc.
  IF l_subrc <> 0.
    MESSAGE e053(rj) WITH text-007.
*   Error in function module RSAP_IDOC_DETERMINE_PARAMETERS
  ENDIF.

* Transfer structure depends on transfer method
  CASE l_s_roosgen-tfmethode.
    WHEN 'I'.
      l_tfstruc = l_s_roosgen-tfstridoc.
    WHEN 'T'.
      l_tfstruc = l_s_roosgen-tfstruc.
  ENDCASE.

* Determine transfer structure field list
  PERFORM fill_field_list(saplrsap) TABLES l_t_fields
                                    USING  l_tfstruc.

* Start the delta extraction for the dummy BW system
  CALL FUNCTION 'RSFH_GET_DATA_SIMPLE'
    EXPORTING
      i_requnr                     = 'DUMMY'
      i_osource                    = c_oltpsource
      i_showlist                   = ' '
      i_maxsize                    = l_s_parameters-maxlines
      i_maxfetch                   = '9999'
      i_updmode                    = 'D '
      i_rlogsys                    = c_dlogsys
      i_read_only                  = ' '
    IMPORTING
      e_lines_read                 = l_lines_read
    TABLES
      i_t_field                    = l_t_fields
    EXCEPTIONS
      generation_error             = 1
      interface_table_error        = 2
      metadata_error               = 3
      error_passed_to_mess_handler = 4
      no_authority                 = 5
      OTHERS                       = 6.
  IF sy-subrc <> 0.
    MESSAGE ID sy-msgid TYPE sy-msgty NUMBER sy-msgno
      WITH sy-msgv1 sy-msgv2 sy-msgv3 sy-msgv4.
  ENDIF.

*********************************
* COMMIT WORK for delta request *
*********************************
  COMMIT WORK.

* Delete RFC queue of dummy BW system
  CALL FUNCTION 'RSC1_TRFC_QUEUE_DELETE_DATA'
    EXPORTING
      i_osource           = c_oltpsource
      i_rlogsys           = c_dlogsys
      i_all               = 'X'
    EXCEPTIONS
      tid_not_executed    = 1
      invalid_parameter   = 2
      client_not_found    = 3
      error_reading_queue = 4
      OTHERS              = 5.
  IF sy-subrc <> 0.
    MESSAGE ID sy-msgid TYPE sy-msgty NUMBER sy-msgno
      WITH sy-msgv1 sy-msgv2 sy-msgv3 sy-msgv4.
  ENDIF.

* Data collection for 0FI_GL_4 delta queue successful
  MESSAGE s053(rj) WITH text-008.